API Days Australia 2024

An engineer’s perspective on the hard-earned lessons, risks, and realities of building with LLMs.

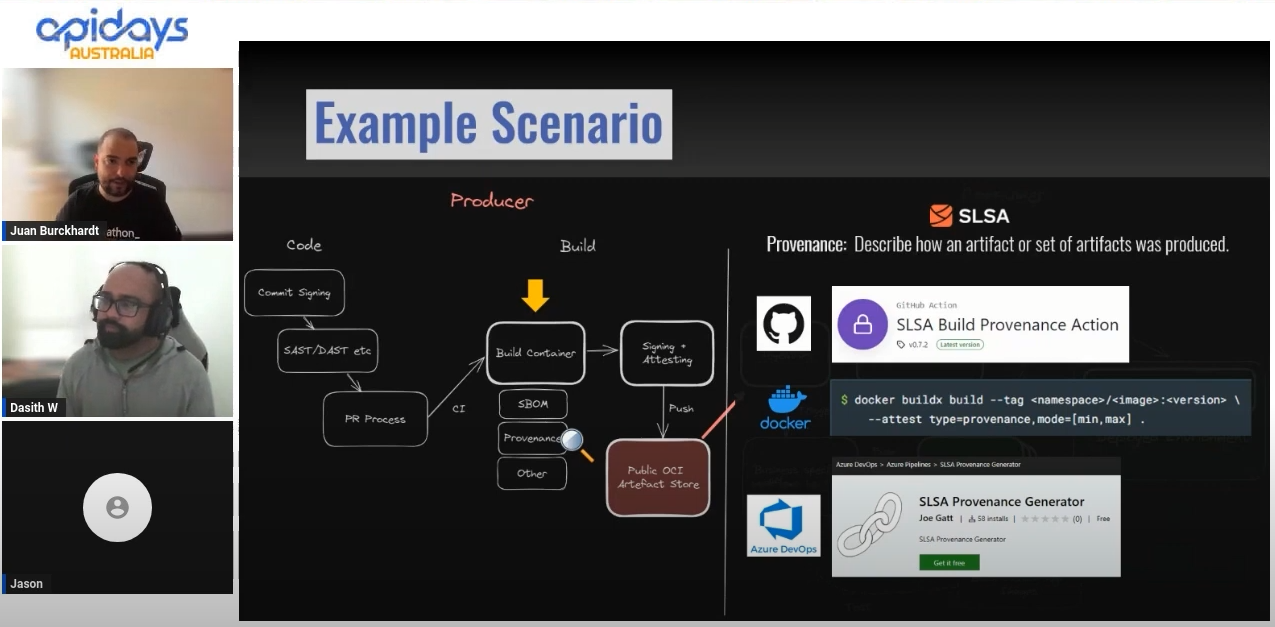

API Days & K8S Meetup 2023

Looking at the modern software supply chain security landscape. #notation #cosign #sbom #slsa #oci1.1

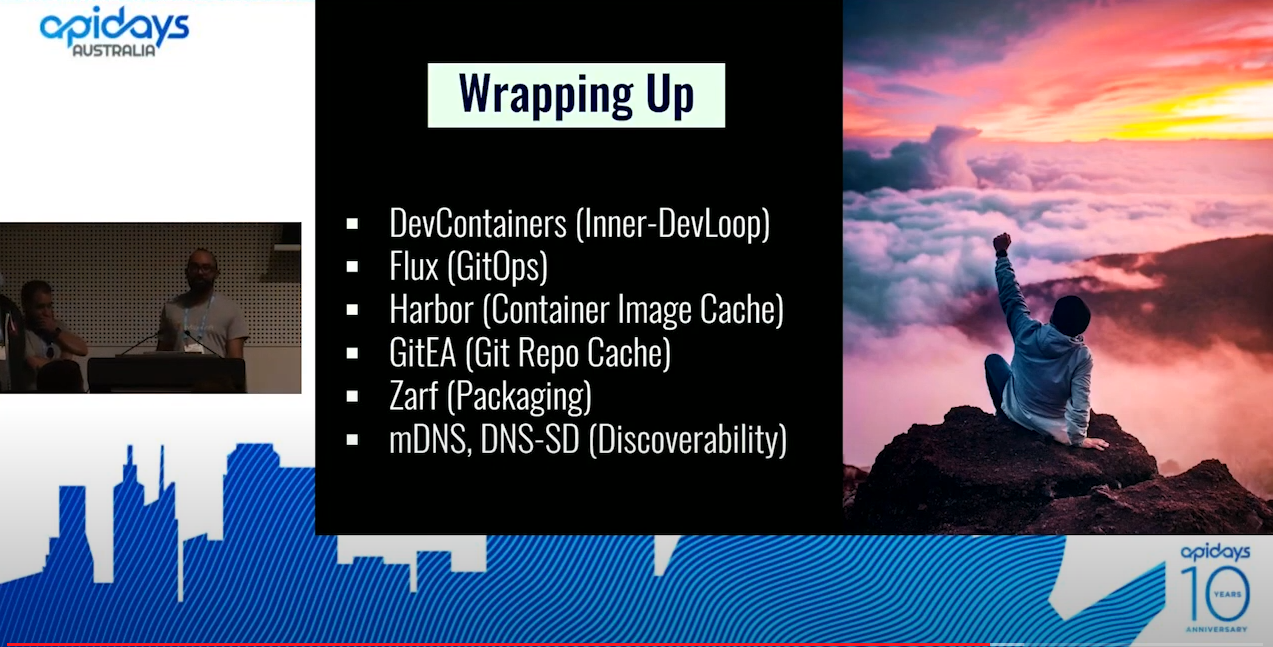

API Days Australia 2022

My team’s presentation on our learnings about EdgeDevOps can be found here.

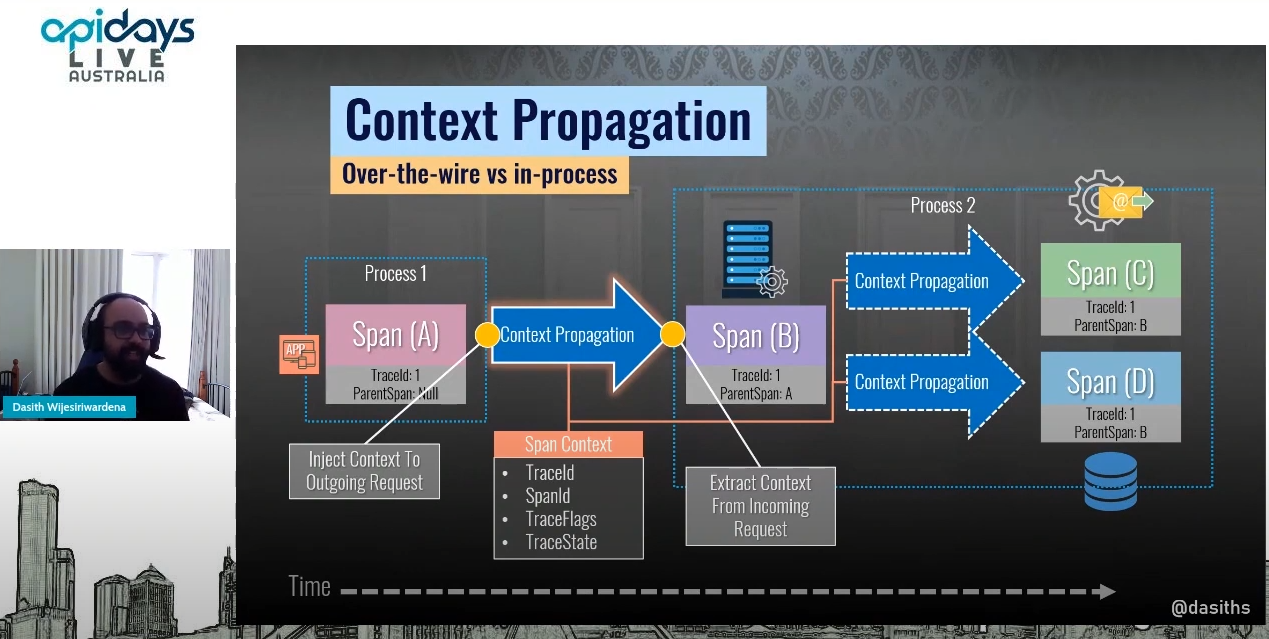

API Days Australia 2021

A live recording and slides from my session about distributed tracing and OpenTelemetry can be found here.

API Days Jakarta 2021

A live recording and slides for my talk “The Shell Game Called Eventual Consistency” are here.

NDC Sydney 2020

A replay of “Not All Microservices Frameworks Are Made The Same” and slides are here.

API Days Live 2020

You can find a live recording of the talk I did about “building distributed systems on the shoulders of giants” here.

API Days Melbourne 2019

You can find the slides and an extended overview of the topics I covered during my talk on Microservices here.

DDD Melbourne 2019

You can find the video recording, abstract and slides here for my talk on Modern Authentication. I covered most OAuth flows and OpenID Connect in this session.

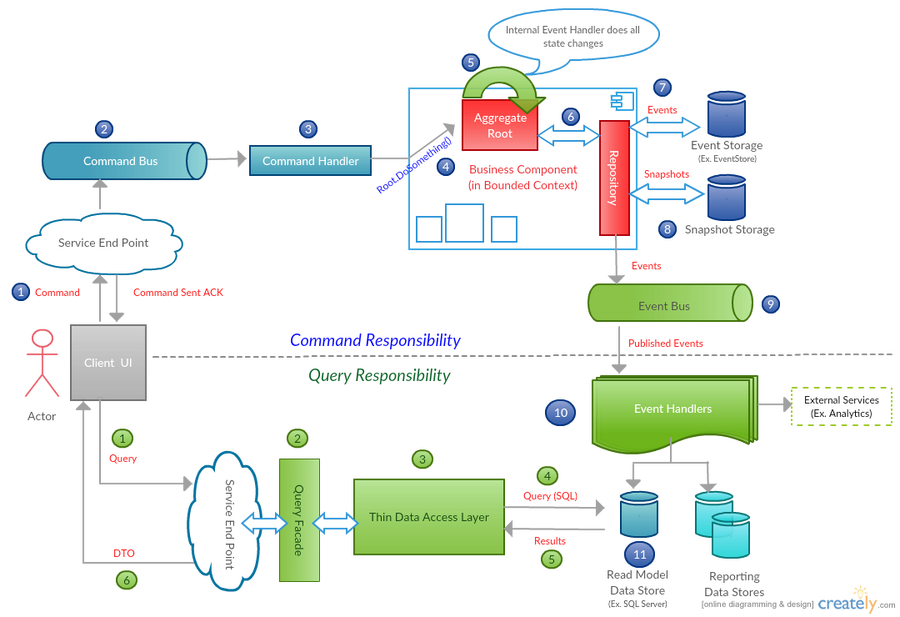

#LevelsConf2018

I recently spoke about Event Sourcing at the inaugural LevelsConf 2018. You can find the abstract and slides here.

SimpleEndpoints

A simple, convention-based implementation of the endpoint-per-action pattern for ASP.NET Core 3.0+.

NimbleConfig

NimbleConfig is an open-source configuration injection library for .NET with full support for ASP.NET Core. It allows you to inject configuration settings in a very simple and testable way.

NEventLite

NEventLite is an open-source Event Sourcing library for .NET that can get your ES+CQRS project up and running quickly and hassle-free. It helps you manage the aggregate lifecycle and supports many event storage providers.

Continue to read my recent posts here.